Posts posted by the.rampage.rado

-

With your current working installation, decode the error message, which can be found in var data = ' ...... '

Then you will see what is causing the issue.

-

13 hours ago, DRMasterChief said:

Good for customers? Time will tell.

In any case, a lot would have to change. The current model is no longer suitable for e-commerce today (from the retailers' perspective).

Good for the previous owner - they squeezed whatever they could and sold a shell...

-

Good for them...

-

Don't you consider this a vital secret for your shop? Aren't you afraid of competitors tracking your business?

I think it's a serious bug in the current code that it is leaked in FO URLs.

-

Is it possible for this module to emit the following items:

- current year - would be wonderful if we could activate the module in the footer of our theme or on a CMS page

- shop name - useful if we have multistore and our CMS pages are the same, but we only want to switch the shop names

- shop URL - same

- contact email - pointing to our shop email in Shop Contacts, again useful in CMS pages

I know that the module currently does not support CMS pages, but I think it could be an interesting proposal for a future release.

-

Could you give me access to your shop to check on this issue?

-

17 minutes ago, cienislaw said:

Let's hope that there still will be someone to be surprised - my client is moving to WooCommerce.

What is the reason for this switch?

-

Merchants can always upload 1px transparent blank images if they want graphics for only some of the categories.
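
If you don't have such a placeholder at hand, a 1x1 fully transparent PNG can be generated with a few lines of Python. This is only a sketch using the standard library; the function names and the output filename are made up for illustration.

```python
import struct
import zlib


def _chunk(kind: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: length, type, data, CRC over type + data."""
    crc = zlib.crc32(kind + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + kind + data + struct.pack(">I", crc)


def transparent_pixel_png() -> bytes:
    """Return the bytes of a 1x1 fully transparent RGBA PNG."""
    signature = b"\x89PNG\r\n\x1a\n"
    # width=1, height=1, bit depth 8, colour type 6 (RGBA),
    # default compression, filter and interlace methods
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 6, 0, 0, 0)
    # one scanline: filter byte 0, then R, G, B, A all zero
    raw = b"\x00" + b"\x00\x00\x00\x00"
    return (
        signature
        + _chunk(b"IHDR", ihdr)
        + _chunk(b"IDAT", zlib.compress(raw))
        + _chunk(b"IEND", b"")
    )


if __name__ == "__main__":
    # "blank.png" is just an example filename
    with open("blank.png", "wb") as fh:
        fh.write(transparent_pixel_png())
```

The resulting file can then be uploaded as the image for every category that should stay visually empty.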

-

54 minutes ago, wakabayashi said:

What does this mean?

Unfortunately I didn't find time to participate a lot on github recently. Hopefully I can change it in 2026. I have so much open Todos 😑 But AI is helping to get faster at solving stuff with code. 😊

The team is working on a surprise. When they are ready, it will be revealed. 🙂

-

36 minutes ago, DRMasterChief said:

Was any solution found for this? Or what triggered it?

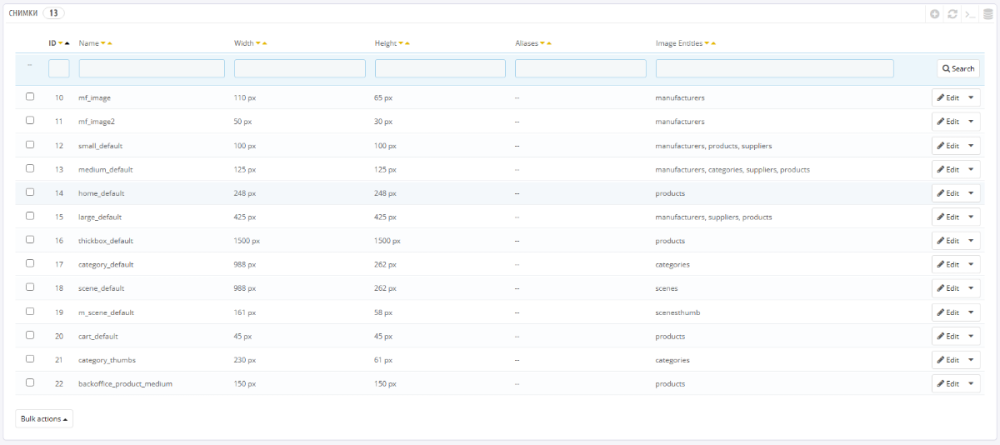

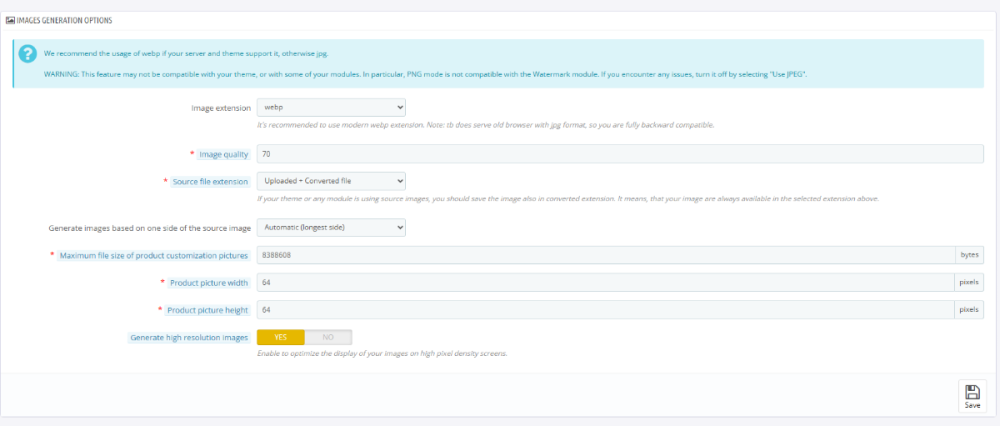

I'm experiencing a situation where, after updating to version 1.6, the thumbnails in the category previews are populated with the camera icon when there's no actual image.

Previously, nothing was displayed there at all, just a blank white space.

I have the .htaccess files from versions 1.5.1 and 1.6 for comparison, but of course, I'm stuck.

Did you follow the guide from page 1? Are all entities set correctly? Here is an example from Warehouse:

Have you regenerated all thumbnails with the new settings further down the page?

Did you regenerate your .htaccess, and does it include image rules like these:

....

RewriteRule ^categories/([0-9]+)(\-[_a-zA-Z0-9\s-]*)?/.+?([2-4]x)?\.(avif|gif|jpeg|jpg|png|webp)$ %{ENV:REWRITEBASE}img/c/$1$2$3.$4 [L]

....

RewriteRule ^products/([0-9])([0-9])(\-[_a-zA-Z0-9\s-]*)?/.+?([2-4]x)?\.(avif|gif|jpeg|jpg|png|webp)$ %{ENV:REWRITEBASE}img/p/$1/$2/$1$2$3$4.$5 [L]

and so on...

-

Configure the entities first. After that, if you're using a custom theme, it might be coded to use hardcoded links to the images rather than the ones supplied by the core. After the image rewrite, the links are different.
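
To see how the new links relate to the files on disk, here is a rough Python sketch that mimics what the category and product RewriteRule lines quoted earlier do. The two patterns are copied from those rules; the sample URLs, image type names, and the function name are made up for illustration, and %{ENV:REWRITEBASE} is left out. The product pattern here only covers two-digit ids, as in the quoted rule.

```python
import re

# Patterns copied from the category and product RewriteRule lines
CATEGORY_RULE = re.compile(
    r"^categories/([0-9]+)(\-[_a-zA-Z0-9\s-]*)?/.+?([2-4]x)?"
    r"\.(avif|gif|jpeg|jpg|png|webp)$"
)
PRODUCT_RULE = re.compile(
    r"^products/([0-9])([0-9])(\-[_a-zA-Z0-9\s-]*)?/.+?([2-4]x)?"
    r"\.(avif|gif|jpeg|jpg|png|webp)$"
)


def resolve_image(url_path):
    """Return the file path a friendly image URL would be rewritten to."""
    m = CATEGORY_RULE.match(url_path)
    if m:
        # Optional groups that did not match are None; treat them as "".
        ident, image_type, retina, ext = (g or "" for g in m.groups())
        return f"img/c/{ident}{image_type}{retina}.{ext}"
    m = PRODUCT_RULE.match(url_path)
    if m:
        d1, d2, image_type, retina, ext = (g or "" for g in m.groups())
        return f"img/p/{d1}/{d2}/{d1}{d2}{image_type}{retina}.{ext}"
    return None  # no rule matched


print(resolve_image("categories/12-category_default/shoes.jpg"))
print(resolve_image("products/42-home_default/red-ball.webp"))
```

A theme that still builds the old hardcoded paths will not produce URLs in this shape, which is one way to spot where a custom theme needs adjusting.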

-

1 hour ago, netamismb said:

I have the same situation when I tried to move to 1.6.

In 1.6 there was a rewrite of the whole images section thanks to @wakabayashi and @datakick.

To update to 1.6 or later, you have to follow the guide shown in BO -> Images.

-

What is your experience with it? Did it sink your shops, or did you get lucky and win?

My shops experienced huge volatility during the 11th-14th and right after that a big swing down. Now I'm down at least 50% in impressions.

All my vital keyword niches are messed up. It promoted shops that don't sell sporting goods but have a huge market in toys and junk items, and it also boosted small, unknown sites that don't look professional at all.

Since the 1st of January I haven't seen any further volatility, but I'm stuck at those lower impression levels. "Luckily," January is always a low point of the year for me, and I'm hoping a small update will roll out around the end of the month.

Of course, there is no blackhat stuff, all links are natural, and nothing bad was done SEO-wise. There is simply a low number of external links, and Google thinks you no longer need first place and moves you to 38th position.

What is your experience with it?

-

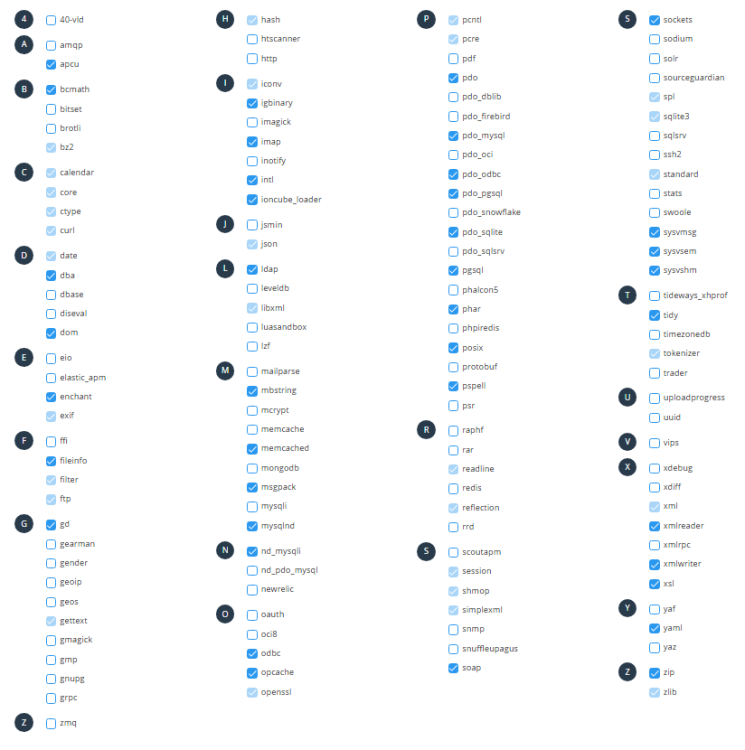

If you build your install for 8.1 and your third-party modules run on it, it's no big trouble. thirty bees has been stable on 8.1 for years.

-

You have to run pdo_mysql.

To quickly check whether you do, open the AdminInformation controller in BO and append "&display=phpinfo" (no quotes) to the URL. This will launch phpinfo, where you can check whether it's running.

If not - turn it on and check again.

-

It's active. Go through the branches and see what is cooking underneath. 🙂

And there are other BIG projects that are not on GitHub, but they have taken a long time to develop.

-

There is a bug in thirty bees or the core updater: define('_TB_BUILD_PHP_', '8.1'); in settings.inc.php is not updated every time the update runs.

It is not critical, and you can edit this manually if you're sure the update propagated and your shop is running OK.

This issue was spotted a while back and @datakick advised that it can be fixed that way, but nobody has tracked down the cause, so it's still present for some users in some scenarios.
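
If you prefer to script the manual edit, something like the following Python sketch could do it. It assumes the line in settings.inc.php looks like the define(...) shown above, with single quotes; the function name and the sample content are hypothetical, and you should back up the file before touching it.

```python
import re

# Matches: define('_TB_BUILD_PHP_', '<version>');
BUILD_PHP = re.compile(
    r"define\(\s*'_TB_BUILD_PHP_'\s*,\s*'([^']*)'\s*\)\s*;"
)


def set_build_php(settings_text, version):
    """Return (new_text, old_version); old_version is None if not found."""
    match = BUILD_PHP.search(settings_text)
    if match is None:
        return settings_text, None
    new_line = f"define('_TB_BUILD_PHP_', '{version}');"
    new_text = (
        settings_text[:match.start()] + new_line + settings_text[match.end():]
    )
    return new_text, match.group(1)


# Sample content standing in for a real settings.inc.php
sample = "<?php\ndefine('_TB_BUILD_PHP_', '7.4');\n"
updated, previous = set_build_php(sample, "8.1")
print(previous, "->", updated.splitlines()[1])
```

The function only works on text, so reading and writing the actual file is left to you; that keeps it easy to check the result before anything is saved.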

-

Currently edge has no pressing bugs (in fact, quite a lot of work has been done since 1.6 in terms of bug fixes and small new features).

If you have a test installation, I would strongly recommend updating it to 1.7 first and seeing if it works with PHP 8.3 (it should work, but third-party themes and modules might have a problem). Then update your production after you've made a working backup of it (file structure and db).

I use edge on my production and I can't remember the last time I noticed a newly introduced bug in my daily work.

But thirty bees 1.6 would also work on PHP 8.3 (with a few more deprecation warnings, which should not be concerning). So if you only want to make your install work on PHP 8.3, I would recommend changing only one variable at a time: get your current store working robustly on 8.3, and only then update it to edge if you see any reason.

-

Could you try to follow this procedure and tell us if it changes anything?

At this very moment, if you're updating to version 1.6, the same version should be shown in your BO.

If you update to the edge channel, your BO should show 1.7 (which is currently not released as a final version).

When you switch between PHP versions, you update only the dependencies; the core is not updated.

-

1 hour ago, Ian Ashton said:

I did have the No Captcha reCAPTCHA Module installed BUT not activated for Registration - so I've now done that. Many thanks.

What's your take on Cloudflare+Turnstile ? Do You think Turnstile will appear in Thirty Bees in the future?

The module on GitHub linked above is for thirty bees, and it works flawlessly.

-

Not all stores use Google accounts. 🙂

Turnstile is a standalone service offered by Cloudflare. Your store does not need to be behind Cloudflare to use Turnstile. Yes, an account with them is needed, but I recommended this module because it offers a smoother flow for real customers, imho.

-

https://github.com/eschiendorfer/genzo_turnstile

Do you have this module for Turnstile in FO? If not, I highly recommend installing it and checking whether the bots stop.

-

As I said, the query you are using in the SQL manager contains forbidden statements, nested queries, etc.

The export functionality in Customers is a prepared query that does not run through thirty bees' SQL manager.

As DRMasterChief said, if you have a tool at hosting level similar to phpMyAdmin and you're comfortable using it, do so, but always keep a fresh copy of your db so you don't end up in a bad situation (a single deleted column in this table can brick your shop).

You want a list containing those columns?

SELECT c.id_customer, c.id_shop_group, c.id_lang, c.firstname, c.lastname, c.email, c.newsletter, c.date_add FROM ps_customer c;

Of course, edit the table prefix to match yours.
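
Since the prefix differs between installations, the query can also be built from it. Below is a small Python sketch; the function name and the tb_ prefix are only examples, and the in-memory SQLite table is a stand-in to show the query shape, not your real MySQL database.

```python
import re
import sqlite3

COLUMNS = ("id_customer", "id_shop_group", "id_lang", "firstname",
           "lastname", "email", "newsletter", "date_add")


def customer_query(prefix):
    """Build the customer list query for a given table prefix."""
    # Allow only simple prefixes so the table name cannot carry SQL.
    if not re.fullmatch(r"[A-Za-z0-9_]*", prefix):
        raise ValueError(f"unexpected table prefix: {prefix!r}")
    cols = ", ".join(f"c.{name}" for name in COLUMNS)
    return f"SELECT {cols} FROM {prefix}customer c;"


# Demo against a throwaway SQLite table (stand-in for the real database).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE tb_customer (%s)" % ", ".join(COLUMNS))
db.execute(
    "INSERT INTO tb_customer VALUES (1, 1, 1, 'Jane', 'Doe', "
    "'jane@example.com', 0, '2024-01-01 10:00:00')"
)
rows = db.execute(customer_query("tb_")).fetchall()
print(rows)
```

It is a read-only SELECT, so running the generated query in phpMyAdmin or a similar tool does not modify the customer table.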

Product - Features

in Technical help

There is no sync script already baked into the platform.

You would have to go and change those manually.

In general, if a product offers no option to change a certain parameter, use features. If it can be changed, use attributes. Later, in the Layered Navigation module, you can filter by both features and attributes if you need to.