-

Posts

400 -

Joined

-

Last visited

-

Days Won

6

Everything posted by Beeta

-

Is anyone getting customers from AI chats? How do you track them? Have you noticed this? Is anyone already using it? https://openai.com/index/buy-it-in-chatgpt/ https://www.agenticcommerce.dev/

-

Table 'tb_layered_product_attribute' doesn't exist in engine

Beeta replied to Beeta's question in Technical help

Solved! Even though the module was already at the latest version, I fixed it by downloading it from the marketplace and overwriting it (without uninstalling it).

-

I would like to be sure that datakick is aware of the behaviour of his module (I have it licensed). @datakick can you confirm that? Thank you @the.rampage.rado for the info about Tidy, but I would like to use it only as a last resort. I can't be sure that TB is going to be supported in the long term by a 3rd-party dev. @musicmaster prestools can do the same thing, right?

-

Because it's the opposite of what I want to do. In @datakick's module I have a cronjob that imports new products (images included) every hour. The imported products may or may not have images. I have another import job that updates only the images of products imported in the last 48 hours, because the source very often adds or changes images 24/48 hours after creating them. So a product imported now usually gets updated 48 times during the next 48 hours (only its image is replaced). If the product already has an image, that image gets updated 48 times. I monitored the filesystem and I can confirm that the import mode named "replace existing images" imports the image again as I want and replaces the product image as I want, but leaves the old image in the filesystem. I think this is the bug that is filling my volumes. This also happens in a third cronjob, where I put products that were out of stock back in stock and update prices and images at the same time, because the source I get products from sometimes changes prices and images.

-

The issue is about importing images again over existing products (re-importing images). About the Tidy module: AFAIK the TB devs changed something in the image management, and using PS modules for image cleaning was not recommended.

-

Hello, I'm using the datakick module to import products hourly. I noticed that the img/p directory contains more than 1.5 million files, which I think is a little too much. This shop has 42k products (12k in stock) and 99.99% of the products have a single image. Right now img/p is huge: it contains 1.526.743 files. Products (with their images) are imported automatically every hour with @datakick's module v2.1.9.

I think the issue is caused by one of the import jobs, which every hour also re-imports the images of (1) products that don't have an image, because the source sometimes adds images after some hours, and (2) products imported during the last 2 days, because the source adds or changes images after 24/36 hours. I'm using import mode: replace existing images.

I monitored the number of files and noticed that it changes even when the import job is not importing new products. I monitored a specific product and I can confirm that import mode "replace existing images" imports the image again as I want and replaces the product image, but the old image is still in the filesystem. Is this the right behaviour or a bug? If it's not a bug, do you have any suggestion on how to clean the img/p dir of duplicates? Thank you
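To get a feel for how many leftover files there are before deleting anything, a dry-run check along these lines might help. It is only a sketch under assumptions: that files in img/p are named `<id_image>.jpg` or `<id_image>-<type>.jpg`, and that the list of valid image ids has been exported separately (for example from the image table). The function name `find_orphans` is made up for this example, and it only reports files, it never deletes.

```python
import re
from pathlib import Path

# Assumed naming: "123.jpg" or "123-home_default.jpg"; the leading number is the image id.
IMAGE_FILE = re.compile(r"^(\d+)(?:-[\w-]+)?\.(?:jpg|jpeg|png|webp)$", re.IGNORECASE)


def find_orphans(img_root, known_ids):
    """Return image files under img_root whose id is not in known_ids (dry run)."""
    orphans = []
    for path in Path(img_root).rglob("*"):
        if not path.is_file():
            continue
        match = IMAGE_FILE.match(path.name)
        # Files that do not look like product images (index.php etc.) are skipped.
        if match and int(match.group(1)) not in known_ids:
            orphans.append(path)
    return sorted(orphans)
```

Calling `find_orphans("/path/to/img/p", ids)` with `ids` as a set of integers returns the candidate files; reviewing that list on a copy of the shop first would be the cautious way to use it.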

-

Is this possible for manufacturers too? I would like to add info for GPSR. I would like to add it to the manufacturer table, because that way modules like prestabay can pull the info and use it on eBay too, without duplicating data in the DB.

-

error 500 after update to 4477865bf9bcf43521003635ce3cd2bc7e5bb3ba

Beeta replied to Beeta's question in Technical help

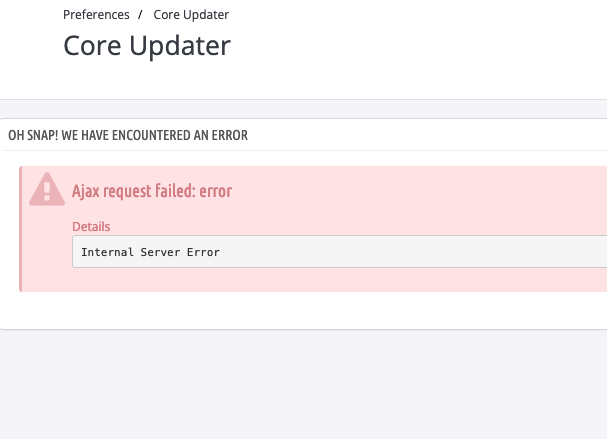

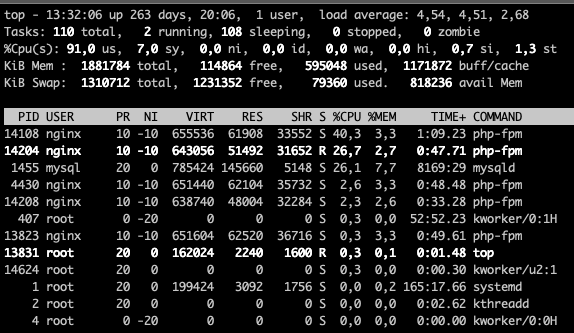

As I can't find anything specific in the log, I'm restoring the last (daily) backup and will then try the update again. I tried again after the snapshot restore and Core Updater gave me Internal Server Error again. I found this in the nginx access log (nothing in the nginx error log): The last two were, I think, me reloading the page or something similar. Do you have any idea where I can search for more information? Right now I'm going to restore the last snapshot and postpone the update.

-

error 500 after update to 4477865bf9bcf43521003635ce3cd2bc7e5bb3ba

Beeta posted a question in Technical help

Hello, I'm getting a problem similar to the one in this topic. In addition, the product images on the homepage seem to be gone, and in the back office I'm getting errors about the price tab. During the update the process failed with "internal server error". I have DB fixes to apply but I can't: clicking to apply a fix throws, for example:

Oh snap! We have encountered an error
Unknown column 'width' in 'tb_product_attribute'
Details
Unknown column 'width' in 'tb_product_attribute'

Now the front-end product pages throw a 500 with this error:

ThirtyBeesDatabaseException
Unknown column 'product_attribute_shop.width' in 'field list'
in file classes/Product.php at line 6265

SQL
SELECT ag.`id_attribute_group`, ag.`is_color_group`, agl.`name` AS group_name, agl.`public_name` AS public_group_name, a.`id_attribute`, al.`name` AS attribute_name, a.`color` AS attribute_color, product_attribute_shop.`id_product_attribute`, IFNULL(stock.quantity, 0) AS quantity, product_attribute_shop.`price`, product_attribute_shop.`ecotax`, product_attribute_shop.`weight`, product_attribute_shop.`default_on`, pa.`reference`, product_attribute_shop.`unit_price_impact`, product_attribute_shop.`minimal_quantity`, product_attribute_shop.`available_date`, ag.`group_type`, product_attribute_shop.`width`, product_attribute_shop.`height`, product_attribute_shop.`depth` FROM `tb_product_attribute` pa INNER JOIN tb_product_attribute_shop product_attribute_shop ON (product_attribute_shop.id_product_attribute = pa.id_product_attribute AND product_attribute_shop.id_shop = 1) LEFT JOIN tb_stock_available stock ON (stock.id_product = pa.id_product AND stock.id_product_attribute = IFNULL(`pa`.id_product_attribute, 0) AND stock.id_shop = 1 AND stock.id_shop_group = 0 ) LEFT JOIN `tb_product_attribute_combination` pac ON (pac.`id_product_attribute` = pa.`id_product_attribute`) LEFT JOIN `tb_attribute` a ON (a.`id_attribute` = pac.`id_attribute`) LEFT JOIN `tb_attribute_group` ag ON (ag.`id_attribute_group` = a.`id_attribute_group`) LEFT JOIN `tb_attribute_lang` al ON (a.`id_attribute` = al.`id_attribute`) LEFT JOIN `tb_attribute_group_lang` agl ON (ag.`id_attribute_group` = agl.`id_attribute_group`) INNER JOIN tb_attribute_shop attribute_shop ON (attribute_shop.id_attribute = a.id_attribute AND attribute_shop.id_shop = 1) WHERE pa.`id_product` = 42002 AND al.`id_lang` = 2 AND agl.`id_lang` = 2 GROUP BY id_attribute_group, id_product_attribute ORDER BY ag.`position` ASC, a.`position` ASC, agl.`name` ASC

Source file: classes/Product.php
6246: IFNULL(stock.quantity, 0) AS quantity, product_attribute_shop.`price`, product_attribute_shop.`ecotax`, product_attribute_shop.`weight`,
6247: product_attribute_shop.`default_on`, pa.`reference`, product_attribute_shop.`unit_price_impact`,
6248: product_attribute_shop.`minimal_quantity`, product_attribute_shop.`available_date`, ag.`group_type`,
6249: product_attribute_shop.`width`, product_attribute_shop.`height`, product_attribute_shop.`depth`
6250: FROM `'._DB_PREFIX_.'product_attribute` pa
6251: '.Shop::addSqlAssociation('product_attribute', 'pa').'
6252: '.static::sqlStock('pa', 'pa').'
6253: LEFT JOIN `'._DB_PREFIX_.'product_attribute_combination` pac ON (pac.`id_product_attribute` = pa.`id_product_attribute`)
6254: LEFT JOIN `'._DB_PREFIX_.'attribute` a ON (a.`id_attribute` = pac.`id_attribute`)
6255: LEFT JOIN `'._DB_PREFIX_.'attribute_group` ag ON (ag.`id_attribute_group` = a.`id_attribute_group`)
6256: LEFT JOIN `'._DB_PREFIX_.'attribute_lang` al ON (a.`id_attribute` = al.`id_attribute`)
6257: LEFT JOIN `'._DB_PREFIX_.'attribute_group_lang` agl ON (ag.`id_attribute_group` = agl.`id_attribute_group`)
6258: '.Shop::addSqlAssociation('attribute', 'a').'
6259: WHERE pa.`id_product` = '.(int) $this->id.'
6260: AND al.`id_lang` = '.(int) $idLang.'
6261: AND agl.`id_lang` = '.(int) $idLang.'
6262: GROUP BY id_attribute_group, id_product_attribute
6263: ORDER BY ag.`position` ASC, a.`position` ASC, agl.`name` ASC';
6264:
6265: return Db::readOnly()->getArray($sql);
6266: }
6267:
6268: /**
6269: * Get product accessories
6270: *
6271: * @param int $idLang Language id
6272: * @param bool $active
6273: *
6274: * @return array|false Product accessories
6275: *

Stack trace
PHP version: 7.4.33. Code revision: bf2fe6fcbed5b2fc29504ca4a0ac044705bcc1bf build for PHP 7.4

-

Running out of inodes...

[11:48][[email protected] tmp]# ls /home/nginx/domains/xxxx.xxx/public/img/tmp | wc -l
27886
[11:48][[email protected] tmp]# df -i
/dev/sda 3260416 3251877 8539 100% /

Is it safe to delete all the content of img/tmp (without touching index.php)?
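Since img/tmp holds only 27886 of the roughly 3.25 million used inodes, it may be worth checking which directories hold the rest. A rough sketch for that, which only reads the filesystem (the function names are invented for this example):

```python
import os
from collections import Counter


def count_files_per_dir(root):
    """Count the non-directory entries sitting directly in each directory under root."""
    counts = Counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        counts[dirpath] = len(filenames)
    return counts


def top_dirs(root, limit=10):
    """Return the `limit` directories with the most entries, largest first."""
    return count_files_per_dir(root).most_common(limit)
```

Running `top_dirs("/home/nginx/domains/xxxx.xxx/public")` would list the ten busiest directories as `(path, count)` pairs.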

-

I have a PrestaShop 1.6.1.24 (it seems clean now) that had the same problem. It's the blm issue, right? @vsn can you list the modules you are using with this thirty bees? If it's not a PS/TB code problem, maybe we can identify the insecure module by comparing. @all, or do you know for sure that it's the infamous "2022 code injection" issue and has nothing to do with PS/TB code?

-

Cache was off from the beginning (and I deleted it just to be sure). I already tried with different devices in incognito.

-

I have inode and disk space issues, so I decided to delete the generated thumbnails to be able to work. What surprised me is that after deleting the thumbnails I can still see them in the front office; the only place I noticed they are gone is the image tab of the product page (back office). Is this normal?

-

I solved it by going directly here: https://myaccount.google.com/apppasswords (the link is not available from the Google account GUI) and adding the app password again (I don't know why it was deleted). But I think that app passwords are going to stop working in the near future. P.S. I found the link to apppasswords here: https://support.google.com/mail/thread/267471964/app-password-not-listed-as-an-available-option?hl=en

-

Now that Gmail app passwords are gone, Google Workspace is not sending mails anymore. Maybe implementing this could solve the problem for Google Workspace too?

-

Thank you, now it's clear. I had already read about the keep JS and CSS options, but with cache disabled I thought I didn't need to disable the other cache options. Is the Google cache still a thing? I commented on GitHub (here) about the possibility of limiting the JS and CSS cache to XX days or XX size.
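Until something like that exists, an age limit could be approximated outside the shop. A minimal sketch, assuming the compiled files sit directly in a single theme cache folder and that modification time is a good enough proxy for "no longer in use" (both are assumptions, and `stale_files` is a made-up name). It only lists candidates and removes nothing:

```python
import os
import time


def stale_files(cache_dir, max_age_days, extensions=(".css", ".js"), now=None):
    """List CSS/JS files in cache_dir last modified more than max_age_days ago."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400  # 86400 seconds per day
    stale = []
    for entry in os.scandir(cache_dir):
        if (
            entry.is_file()
            and entry.name.endswith(extensions)
            and entry.stat().st_mtime < cutoff
        ):
            stale.append(entry.path)
    return sorted(stale)
```

For example, `stale_files("/path/to/theme/cache", 30)` returns the files older than 30 days, which can then be reviewed before anything is deleted.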

-

is this still a thing? I have the cache disabled but 5GB of CSS and JS files in my community theme cache folder.

-

Table 'tb_layered_product_attribute' doesn't exist in engine

Beeta replied to Beeta's question in Technical help

I dug a bit. The tb_layered_product_attribute.ibd file is missing. The strange thing is that the blocklayered filter is still working. I think the only thing to do is recover the table from a backup and do a full restore into a new database.

-

Table 'tb_layered_product_attribute' doesn't exist in engine

Beeta posted a question in Technical help

I'm getting this error/warning when saving a product. I only noticed it now. Is it possible that it showed up after uninstalling the Panda theme? Is it a core table?

-

nginx configuration for thirtybees

Beeta commented on datakick's blog entry in Datakick's Tips and Tricks

Another suggestion is to use a cronjob to update the IP list from Cloudflare. In my case, I use the centminmod framework on the VPS and I just learned that it has a script installed by default that updates the nginx conf with the latest IP list. You can take a look at it here: https://community.centminmod.com/threads/csfcf-sh-automate-cloudflare-nginx-csf-firewall-setups.6241/ You can see the script (csfcf.sh) here: https://github.com/centminmod/centminmod/tree/master/tools The cronjob runs the script, and the script updates /usr/local/nginx/conf/cloudflare.conf, which is included in the main nginx conf by default (commented out): #include /usr/local/nginx/conf/cloudflare.conf; So in my case I added the cronjob and uncommented the include line in the main nginx conf. That's it. Thank you George Liu (eva2000)! ^_^

-

nginx configuration for thirtybees

Beeta commented on datakick's blog entry in Datakick's Tips and Tricks

@datakick Cloudflare added some IPv4 ranges (see below). Do you recommend adding them to the nginx configuration too? 173.245.48.0/20 103.21.244.0/22 103.22.200.0/22 103.31.4.0/22 141.101.64.0/18 108.162.192.0/18 190.93.240.0/20 188.114.96.0/20 197.234.240.0/22 198.41.128.0/17 162.158.0.0/15 104.16.0.0/13 104.24.0.0/14 172.64.0.0/13 131.0.72.0/22 source: https://www.cloudflare.com/ips-v4

-
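If the ranges do get added, typing them by hand is error-prone, so here is a small sketch that turns the list above into nginx lines. It assumes the configuration restores the visitor IP with the realip module's `set_real_ip_from` and `real_ip_header` directives and Cloudflare's `CF-Connecting-IP` header; whether the blog entry's configuration does it this way is my assumption. `nginx_real_ip_conf` is a made-up helper name.

```python
import ipaddress

# Ranges copied from the post above (source: https://www.cloudflare.com/ips-v4).
CLOUDFLARE_V4 = """
173.245.48.0/20 103.21.244.0/22 103.22.200.0/22 103.31.4.0/22
141.101.64.0/18 108.162.192.0/18 190.93.240.0/20 188.114.96.0/20
197.234.240.0/22 198.41.128.0/17 162.158.0.0/15 104.16.0.0/13
104.24.0.0/14 172.64.0.0/13 131.0.72.0/22
""".split()


def nginx_real_ip_conf(ranges, header="CF-Connecting-IP"):
    """Build an nginx snippet that trusts the given proxy ranges."""
    lines = []
    for cidr in ranges:
        # ip_network raises ValueError on a malformed range, so typos fail early.
        network = ipaddress.ip_network(cidr)
        lines.append(f"set_real_ip_from {network};")
    lines.append(f"real_ip_header {header};")
    return "\n".join(lines) + "\n"
```

`print(nginx_real_ip_conf(CLOUDFLARE_V4))` produces one `set_real_ip_from` line per range followed by the header directive, ready to be saved into an included conf file.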

Sometimes some Hong-Kong customers show up. I think I’m going to use cloudflare. If I’m not wrong the anti ddos is included in the free version.

-

Is there a way to stop bots from flooding the stats?

Beeta replied to movieseals's question in Technical help

Same issue here: they slow down the website, and I'm getting up to 600 at the same time. I already have Blackhole for Bad Bots v1.0.1 by DataKick installed and active.

-

I'm getting a huge number of visitors on my shop and it doesn't make any sense. I'm on bleeding edge 6638c53f7f3f1be43ccd08657c6f81fec0081f74. I think they are bots, and they are slowing down the server like a mini DDoS. Examples:

1493615 148.66.20.58 13:22:37 - None
1493614 52.128.247.82 13:22:36 - None
1493613 148.66.20.58 13:22:35 - None
1493612 154.12.38.158 13:22:34 - None
1493608 182.16.34.234 13:22:33 - None
1493609 148.66.22.226 13:22:33 - None
1493610 148.66.3.194 13:22:33 - None
1493611 154.12.38.198 13:22:33 - None
1493607 154.12.58.225 13:22:32 - None
1493605 112.121.172.98 13:22:30 - None
1493606 154.12.52.225 13:22:30 - None
1493603 154.12.52.225 13:22:29 - None
1493604 182.16.34.234 13:22:29 - None

How can I limit them?
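Before picking a way to limit them, it can help to see whether the hits cluster in a few networks. A quick sketch that groups the sample IPs above by /16 (the prefix length is an arbitrary choice for illustration, and `hits_per_network` is a made-up name):

```python
import ipaddress
from collections import Counter

# IPs taken from the sample rows above.
SAMPLE_IPS = [
    "148.66.20.58", "52.128.247.82", "148.66.20.58", "154.12.38.158",
    "182.16.34.234", "148.66.22.226", "148.66.3.194", "154.12.38.198",
    "154.12.58.225", "112.121.172.98", "154.12.52.225", "154.12.52.225",
    "182.16.34.234",
]


def hits_per_network(ips, prefix=16):
    """Count hits per enclosing network of the given prefix length, busiest first."""
    counts = Counter()
    for ip in ips:
        # strict=False lets us pass a host address and get its enclosing network.
        network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        counts[str(network)] += 1
    return counts.most_common()
```

On the 13 sample rows this puts 154.12.0.0/16 first with 5 hits and 148.66.0.0/16 second with 4, so most of this traffic comes from just two networks.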

-

This time I had to recreate the schedules: even re-saving them didn't make them run again, although the next scheduled time column was updated.