Everything posted by datakick

-

Introducing Contact Form IP Address Blocker module

datakick replied to datakick's topic in Contact Form IP Address Blocker

I would be a little afraid of blocking regular customers by mistake, but it could easily be done, for sure.

-

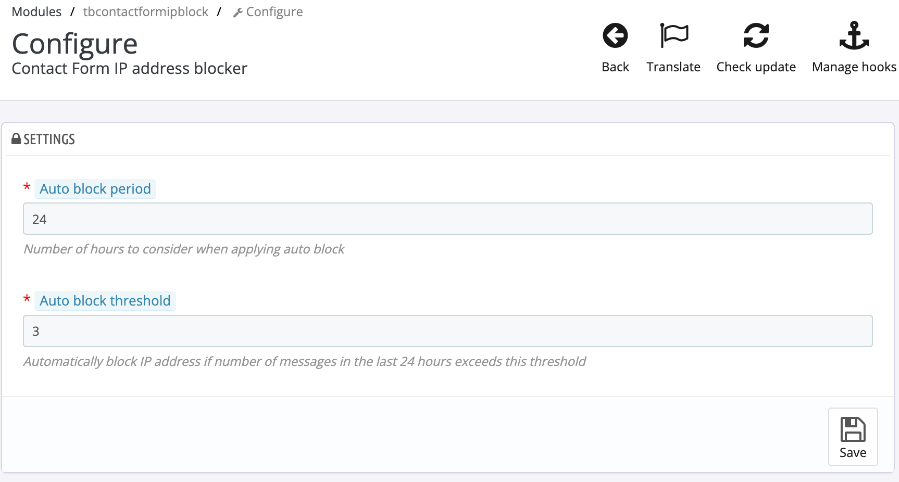

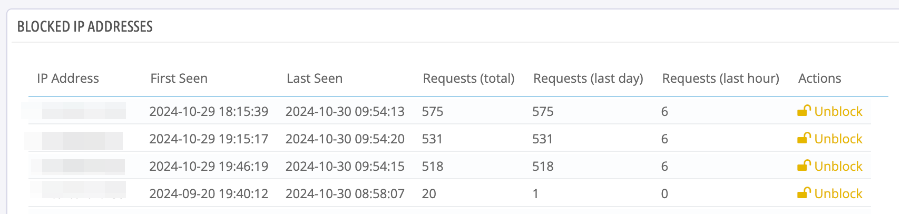

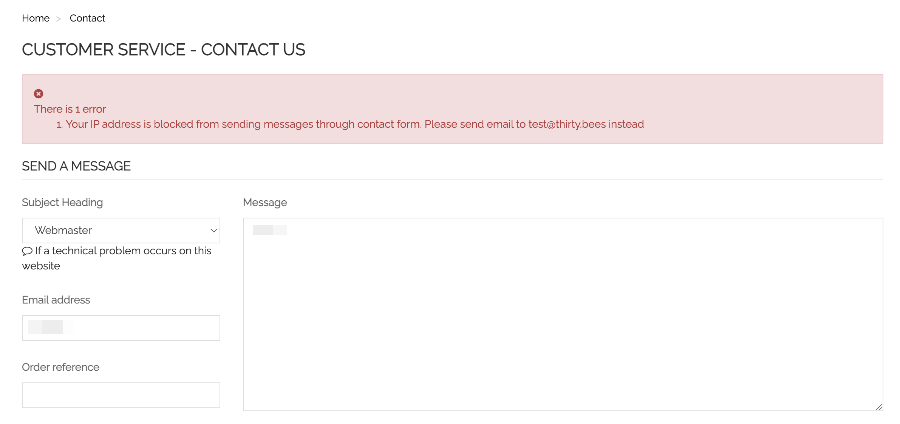

Hi everyone,

we are happy to announce a new premium module: Contact Form IP Address Blocker.

First of all, this module only works on thirty bees version 1.6.0. If your store is not on this version, you will need to update first.

Thirty bees 1.6.0 introduced a new hook that allows modules to filter contact form messages. The Contact Form IP Address Blocker module uses this hook to block the sending of messages based on IP address.

It collects statistics for every IP address: how many times it sent, or attempted to send, a contact form message. This information is shown to the administrator, who can ban the IP addresses of spammers. There is also an option to automatically ban IP addresses after they send N messages in the last H hours (both N and H can be configured).

Note that the ban applies to sending contact form messages only. So if you ban somebody by mistake, they can still use your site; they just can't send you a contact form message. They are asked to send an email instead.

I hope you will like this new addition to our premium modules.
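The automatic ban rule (N messages in the last H hours) can be pictured as a sliding-window counter per IP address. The sketch below is only an illustration in Python, not the module's actual code: the class name, method names, and the choice to let N messages through and ban on the next attempt are all assumptions.

```python
from collections import defaultdict, deque
import time


class ContactFormRateLimiter:
    """Hypothetical sketch: ban an IP once it exceeds N messages within H hours."""

    def __init__(self, max_messages, window_hours):
        self.max_messages = max_messages            # N (configurable)
        self.window_seconds = window_hours * 3600   # H (configurable), in seconds
        self.attempts = defaultdict(deque)          # ip -> timestamps of recent attempts
        self.banned = set()                         # IPs blocked from the contact form

    def allow(self, ip, now=None):
        """Record an attempt and return True if the message may be sent."""
        now = time.time() if now is None else now
        if ip in self.banned:
            return False
        window = self.attempts[ip]
        # Forget attempts that fell out of the last H hours.
        while window and now - window[0] >= self.window_seconds:
            window.popleft()
        window.append(now)
        # Assumption: N messages go through, the next attempt triggers the ban.
        if len(window) > self.max_messages:
            self.banned.add(ip)
            return False
        return True
```

With `ContactFormRateLimiter(max_messages=2, window_hours=1)`, two messages from the same address within an hour are accepted and the third one bans that address. Other addresses are unaffected, and the ban only concerns the contact form.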

-

Invoice number in emails by updating classes/module/PaymentModule.php

datakick replied to 30knees's question in Technical help

OrderHistory: https://github.com/thirtybees/thirtybees/blob/0913a2fa58cfd7db1e1f9ac33b99389777edba78/classes/order/OrderHistory.php#L480

Sure, we would gladly accept a PR. Extra parameters passed to email templates are fine, as long as they don't cause errors or performance issues.

-

Invoice number in emails by updating classes/module/PaymentModule.php

datakick replied to 30knees's question in Technical help

This class handles the sending of order_conf and voucher emails. So if you want to extend those email templates, it's the right place to modify. It has no impact on order status emails, like bankwire, though.

-

urgent No longer getting Order confirmation emails?

datakick replied to bhtoys's question in Technical help

In 1.1.0, the test mail functionality does not use the standard Mail::Send. There was a dedicated method in the Mail Controller that simply attempted to send an email using the provided credentials. If this works, but no other email works, then the issue will be somewhere in Mail::Send.

You are saying that nothing has changed. I don't believe that. Something must have changed, otherwise it would continue to work. It doesn't mean that you made any changes, though. For example, it's possible that your site was hacked, and some core files were modified, breaking some functionality. Or your hosting provider may have changed the PHP version, or upgraded the MySQL server.

-

urgent No longer getting Order confirmation emails?

datakick replied to bhtoys's question in Technical help

What exactly do you mean by 'Order confirmation' emails: the email sent to the customer, or to the merchant?

If you are talking about the merchant email, then it will be an issue with your mailalerts module. My guess is that the email is not correctly translated to your language. Some older thirty bees versions (for example 1.1.0 🙂 ) contained a bug that created empty email templates in your /themes/<theme>/modules/<module>/mails/<lang>/ directory when you opened Localization > Translations > Email Translations. Check the path for the mailalerts module, and verify that there are no files with zero size. Delete them if they exist.

Check if the email is logged as sent in Advanced Parameters > Emails. If it's listed there, it's not a thirty bees problem, but an issue with your mail server.

-

I suggest you extract these as overrides into a custom module. That way, you can easily transfer the same functionality between installations (for example to your test env), and the changes are nicely attributed to your module when listing overrides in the 'overridecheck' module. It should be a fairly easy task; my estimate is 1-2 hours of work at most. We are happy to help you with this: you can buy ad hoc support time, or you can purchase one of the supporter plans that come with 1 or 2 hours of support monthly. You can use this time for anything: store update, custom dev, ...

-

It's on hold right now, as I don't have free capacity to work on it. Still, I'd like to finish this.

-

[solved] I can't get phpmail sending - host Hetzner

datakick replied to Pedalman's question in Technical help

Just to be sure: did you set this module to be your mail transport? If so, you could try to misconfigure it (set a wrong password) to verify that it actually communicates with your SMTP server.

-

Code changes needed so one country can be in multiple zones?

datakick replied to 30knees's question in Technical help

Not possible. Each country can be in only one zone. The entire system expects this behaviour.

-

use 'attachments' but without attached file - how?

datakick replied to DRMasterChief's question in Technical help

Back to the original question: no, it's not possible to use attachments without a file. You could change the template code and hide the file attachment input, but that is not enough. The backend would not process the add/edit request. It expects a file, and it does a lot of work processing it.

If you really want to (ab)use this attachment functionality, you could simply upload some small dummy file. Then you can modify your front theme product template so that it does not display any attached files.

-

That will be some 'security' measure installed on your server (firewall, antivirus, or something similar) that blocks your server from downloading the zip file. Or it may be a problem with write permissions for the /modules/ directory.

-

https://github.com/thirtybees/thirtybees/issues/1759

Cache problems. Try updating to bleeding edge.

-

I'm sure there are many.

My datakick module can be used to generate an XML feed for GMC.

You can use the channable module to export your content to Channable, and from there you can push it to any number of third-party services, including GMC.

There is also the free google shopping flux module (https://github.com/d1m007/gshoppingflux). It has a compatibility issue with thirty bees, see this PR: https://github.com/d1m007/gshoppingflux/pull/105/files

And I'm sure there are many more.

-

I'm afraid that would not work properly with watermark module.

-

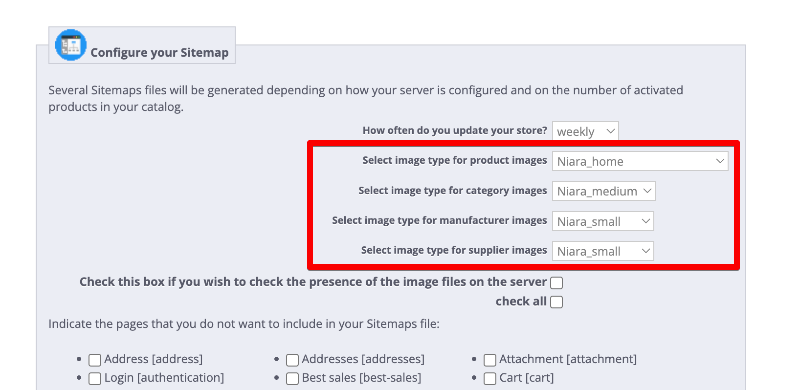

Use the sitemap module, and configure it to use the proper image variants. Then add your sitemap to your Google console, and wait for Google to reindex.

-

Oh, sorry about that, that was a bug in the installation zip file. Please uninstall the module, and then delete the module from your disk. After deletion, install it again. It will download the new, fixed package.

-

[solved] Installing TB fresh stops at setup step 3

datakick replied to Pedalman's question in Technical help

Also, before installation, ensure that your PHP has the following extensions installed / enabled:

Required:

* bcmath
* gd
* json
* mbstring
* openssl
* mysql (PDO only)
* xml (SimpleXML, DOMDocument)
* zip

Recommended:

* imap (for allowing to use an IMAP server rather than PHP's built-in mail function)
* curl (for better handling of background HTTPS requests)
* opcache (not mandatory because some hosters turn this off in favor of other caching mechanisms)
* apcu/redis/memcache(d)

-

[solved] Installing TB fresh stops at setup step 3

datakick replied to Pedalman's question in Technical help

I remember that some old versions of thirty bees had issues when they encountered a system compatibility problem during installation. Instead of displaying an error message, they displayed just a blank error page. Try using the 1.5.1 installation package instead.

-

Oh yeah, it's funny. And sad. Mostly sad. Not funny at all, actually.

You can try it yourself (on a testing environment, please). For example, view any order, and change vieworder in the url bar to deleteorder:

https://domain.com/admin-dev/index.php?controller=AdminOrders&id_order=3&vieworder&token=<token>

https://domain.com/admin-dev/index.php?controller=AdminOrders&id_order=3&deleteorder&token=<token>

And hit enter. The order is deleted.

This is how the Delete button in the list works now. It is a simple GET request, guarded by a confirmation popup. But when prefetching is enabled, the browser calls this GET url before you hit confirm.

This particular problem can be fixed by fixing HelperList, and sending a POST ajax request instead. That would, probably, break a lot of things: integration with third party modules, etc. You know, backwards compatibility.
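To make the GET versus POST point concrete, here is a small Python sketch of a request handler that refuses destructive actions unless they arrive as POST. It is not thirty bees code: the function name, the status codes returned, and the in-memory `orders` dictionary are hypothetical, and only the `vieworder` / `deleteorder` action names come from the URLs above.

```python
# Hypothetical sketch, not thirty bees code: destructive actions require POST,
# so a browser prefetching a GET url cannot trigger them.
DESTRUCTIVE_ACTIONS = {"deleteorder"}


def handle_order_request(method, action, order_id, orders):
    """Return a (status, message) tuple; `orders` is only mutated on POST."""
    if action == "vieworder":
        if order_id in orders:
            return 200, orders[order_id]
        return 404, "order not found"
    if action in DESTRUCTIVE_ACTIONS:
        if method != "POST":
            # A prefetched link is always a GET, so it ends up here.
            return 405, "destructive actions require POST"
        if orders.pop(order_id, None) is None:
            return 404, "order not found"
        return 200, "order deleted"
    return 400, "unknown action"
```

In this sketch, a prefetched `GET ...&deleteorder` gets a 405 and the order stays in place, while the same action sent as POST after the confirmation popup deletes it.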

-

It can't be empty. Just put in some placeholder text like "New order created" and that's it.

-

Ok, so that's the problem. The module checks that both email template variants exist. It shouldn't really do that, I'll create a ticket for that.

-

What about the new_order.txt template?

-

Did you check the content of the template? Maybe the template is empty (there was a bug in older versions of thirty bees that sometimes generated empty template files).

Is Swedish the only language in the store? If not, make sure the template exists for all languages. mailalerts will use the language that is assigned in the employee's preferences, so if the employee is using English for the back office, the en variant of the email template is used for them.
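The checks discussed in this thread (template present for every language, in both variants, and not empty) can be scripted. The Python sketch below is just one way to do it. It assumes a mails directory with one subdirectory per language, and that the two variants are named new_order.html and new_order.txt. The function name is made up, and only new_order.txt is actually named in this thread.

```python
from pathlib import Path


def missing_template_variants(mails_dir, template="new_order", extensions=("html", "txt")):
    """Return {language: [problems]} for templates that are missing or empty.

    Assumes the layout <mails_dir>/<lang>/<template>.<ext>; the file names
    are an assumption, adjust them to what is on your disk.
    """
    problems = {}
    for lang_dir in sorted(Path(mails_dir).iterdir()):
        if not lang_dir.is_dir():
            continue
        found = []
        for ext in extensions:
            template_file = lang_dir / f"{template}.{ext}"
            if not template_file.is_file():
                found.append(f"{template_file.name}: missing")
            elif template_file.stat().st_size == 0:
                found.append(f"{template_file.name}: empty")
        if found:
            problems[lang_dir.name] = found
    return problems
```

Run it against the mails directory of the mailalerts module, in the theme as well as in the module itself. An empty result means every language directory has both variants with some content.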